Warnings From the Queer History of Modern Internet Regulation

Chris Kryzan, who worked in tech marketing at the time, remembers this well. In 1993, he launched an online organization called OutProud, a “Google for queers.” It featured a collection of resources for queer teens: national chat rooms, lists of queer-friendly hotlines, news clippings, and a database where kids could type in their zip codes and get connected to resources in their area. At its peak in the mid-90s, somewhere in the range of 7,000 to 8,000 kids had signed up. Yet the computer networks that hosted him, like CompuServe and to a lesser extent AOL, quickly cast his group as sexually explicit, simply because it centered gay and trans people.

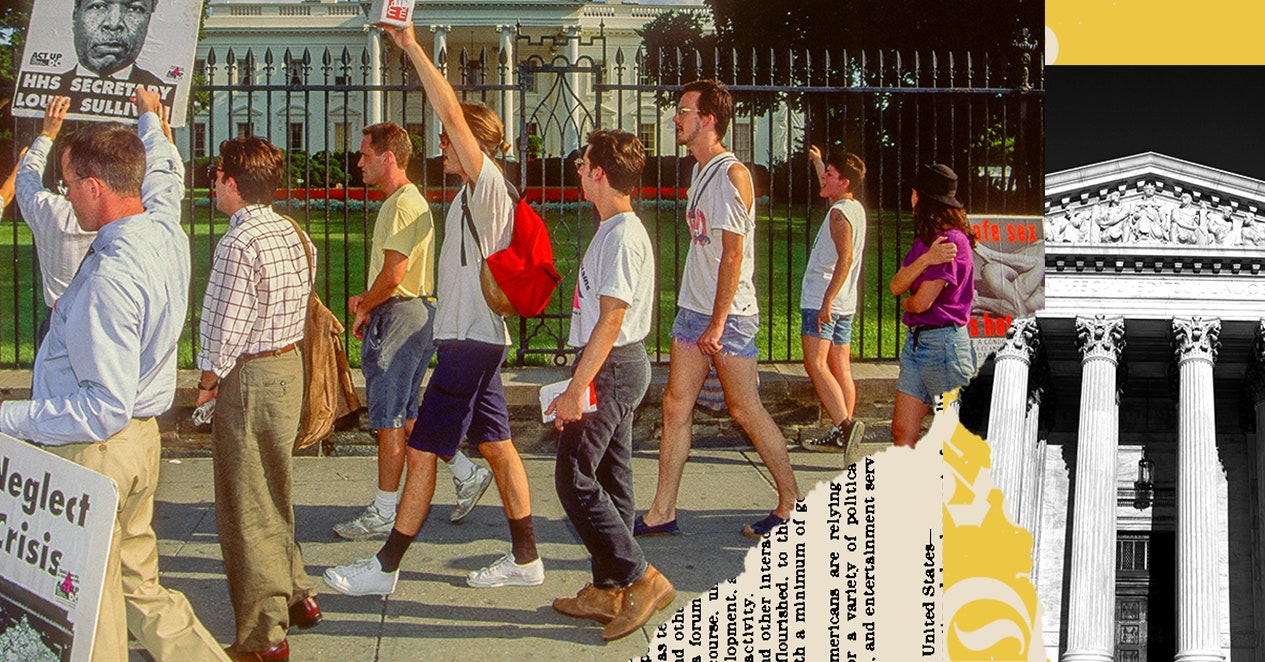

So in 1995, when Congress first began considering a draft of the Communications Decency Act, the bill put Kryzan’s work in jeopardy of being labeled criminal. Kryzan, along with internet-focused groups like the Queer Resources Directory, decided to fight back. Gay newspapers ran editorials opposing the law. When queer activists discovered that the Christian Coalition, a prominent supporter of the CDA, had set up a phone line that would forward messages of support for the Act to senators, queer users flooded it with anti-CDA calls instead.

As the CDA debate raged, a pair of lawmakers—Chris Cox and Ron Wyden—introduced an unrelated bill in the House called the Internet Freedom and Family Empowerment Act. The legislation responded to a controversial court case, where a bulletin board service was held liable for third-party posts because it had conducted content moderation; the judge considered the service as much a publisher of the defamatory material as the original poster. The decision seemed to suggest that service providers that took a hands-off approach would be free from liability, whereas those that moderated even some content would have to be accountable for all content. Essentially, the Cox/Wyden bill tried to encourage service providers to perform content moderation, while also granting them legal immunity by not treating them as publishers.

Eventually, in early 1996, the Communications Decency Act was signed into law. But as a concession to the tech world, a version of the Cox/Wyden bill was added to it as Section 230.

When the ACLU, Kuromiya, the Queer Resources Directory, and a coalition of others sued, they were able to strike down much of the CDA, including the “indecent” and “patently offensive” provisions, as unconstitutional—but Section 230 remained. In his testimony, Kuromiya showed not only that overly broad internet regulation like the CDA would endanger online gathering spaces for marginalized people, but also that a community website like his didn’t have the resources to verify user ages or moderate all content that outside users post. The latter bolstered the case for Section 230. Whereas the CDA jeopardized marginalized communities’ online presences, Section 230, even if it did not necessarily intend to protect them, at least gave them some breathing room from the knee-jerk impulses of internet service providers seeking to avoid liability.

At the time, few anticipated that Section 230 protections would soon apply to a new crop of internet behemoths like Facebook and Google, rather than small providers like Kuromiya. Yet the internet governance that lingers today came out of these clashes around sexuality and who gets to exist online.

Except for Section 230 and an obscenity provision, the CDA is no longer with us. But that doesn’t mean revivals haven’t been attempted in the decades since: Queer activists like Tom Rielly, former co-chair of the tech worker group Digital Queers, have been involved in shutting down later efforts to regulate sexuality on the internet. Rielly testified in court that a 1998 law called the Child Online Protection Act, a kind of CDA reprise, would mean the downfall of a gay-focused website he launched called PlanetOut. (COPA was later struck down.)