Samsung to Use Neural Network to Kill ‘Bad’ Pixels, Improve Image Quality

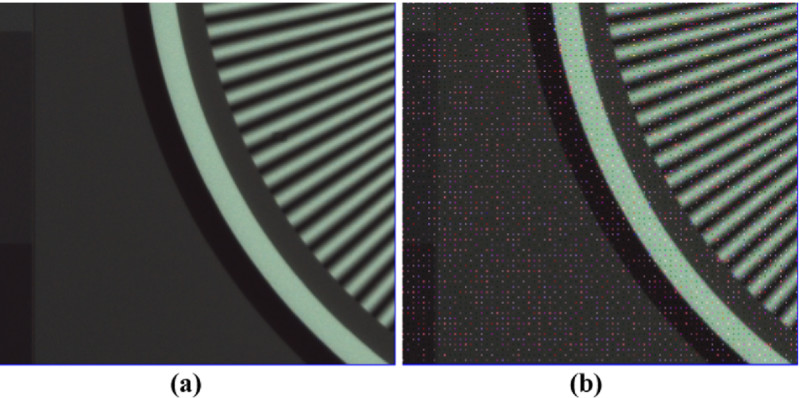

CMOS image sensors, while amazing in many ways, aren’t flawless: they are affected by several types of noise that can reduce image quality. That noise can lead to corrupted, or “bad,” pixels, and Samsung has unveiled a new method to get rid of them: a neural network.

Originally presented at the International Conference on Computer Vision and Image Processing, the paper by Samsung Electronics’ Girish Kalyanasundaram was recently published online and noticed by Image Sensors World. In it, Kalyanasundaram explains how Samsung is investigating a way to combat “bad” pixels and sensor noise by using a pre-processing assisted neural network.

“The proposed method uses a simple neural network approach to detect such bad pixels on a Bayer sensor image so that it can be corrected and overall image quality can be improved,” the abstract reads. “The results show that we are able to achieve a defect miss rate of less than 0.045% with the proposed method.”
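To make the “miss rate” figure concrete, here is a minimal Python sketch of how such detection metrics could be computed. The article does not spell out how the paper defines its defect miss rate, so this assumes it is the share of truly bad pixels the detector fails to flag; the function name `detection_rates` and the toy masks are hypothetical.

```python
def detection_rates(truth, predicted):
    """Return (miss_rate, false_positive_rate) for two same-shaped 2D boolean masks.

    Assumed definitions (not taken from the paper):
      miss_rate           = missed defects / true defects
      false_positive_rate = good pixels flagged as bad / good pixels
    """
    misses = false_pos = n_defects = n_good = 0
    for truth_row, pred_row in zip(truth, predicted):
        for is_bad, flagged in zip(truth_row, pred_row):
            if is_bad:
                n_defects += 1
                if not flagged:
                    misses += 1
            else:
                n_good += 1
                if flagged:
                    false_pos += 1
    miss_rate = misses / n_defects if n_defects else 0.0
    fp_rate = false_pos / n_good if n_good else 0.0
    return miss_rate, fp_rate


# Toy 4x4 frame with four truly bad pixels
truth = [[False] * 4 for _ in range(4)]
for r, c in [(0, 0), (1, 2), (2, 1), (3, 3)]:
    truth[r][c] = True

# A hypothetical detector that misses one defect and flags one good pixel
predicted = [row[:] for row in truth]
predicted[3][3] = False  # miss
predicted[0][3] = True   # false positive

miss_rate, fp_rate = detection_rates(truth, predicted)
print(f"miss rate: {miss_rate:.2%}, false positive rate: {fp_rate:.2%}")
```

The two rates pull against each other: a detector tuned to flag more pixels will usually miss fewer defects but raise more false alarms.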

As the resolution of Bayer sensors increases, particularly on sensors as small as those found in smartphones, they become more susceptible to various types of unwanted noise. Any of these can distort a pixel’s intensity and therefore degrade perceived image quality.

“Such pixels are called ‘bad pixels’, and they can be of two types: static and dynamic,” Kalyanasundaram explains. “Static pixels are those with permanent defects, which are introduced during the manufacturing stage and are always fixed in terms of location and intensity. These kinds of pixels are tested and their locations are stored in advance in the sensor’s memory so they can be corrected by the image sensor pipeline (ISP). Dynamic bad pixels are not consistent. They change spatially and temporally, which makes them harder to detect and correct.”
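The static case described above can be illustrated with a short Python sketch. It is not Samsung’s pipeline: it simply assumes a stored list of defect coordinates and replaces each one with the median of its same-color neighbors, which sit two pixels away in a Bayer mosaic. The function name `correct_static_pixels` and the toy frame are hypothetical.

```python
from statistics import median


def correct_static_pixels(raw, bad_locations):
    """Replace each known-bad pixel with the median of its same-color neighbors.

    raw: 2D list of pixel intensities from a Bayer mosaic.
    bad_locations: (row, col) pairs from a hypothetical stored defect map.
    Returns a corrected copy; the input frame is left untouched.
    """
    height, width = len(raw), len(raw[0])
    bad = set(bad_locations)
    corrected = [row[:] for row in raw]
    for r, c in bad:
        neighbors = []
        # The Bayer pattern repeats every 2 pixels, so pixels at offsets of
        # 2 in either direction sit under the same color filter.
        for dr in (-2, 0, 2):
            for dc in (-2, 0, 2):
                nr, nc = r + dr, c + dc
                if (dr, dc) == (0, 0) or (nr, nc) in bad:
                    continue  # skip the pixel itself and other known defects
                if 0 <= nr < height and 0 <= nc < width:
                    neighbors.append(raw[nr][nc])
        if neighbors:
            corrected[r][c] = median(neighbors)
    return corrected


# Toy 5x5 frame: flat gray with one stuck-high pixel in the center
frame = [[100] * 5 for _ in range(5)]
frame[2][2] = 1023
corrected = correct_static_pixels(frame, [(2, 2)])
print(corrected[2][2])
```

Because the locations are known ahead of time, no detection step is needed here. That is exactly what dynamic bad pixels lack, which is why they are the harder problem.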

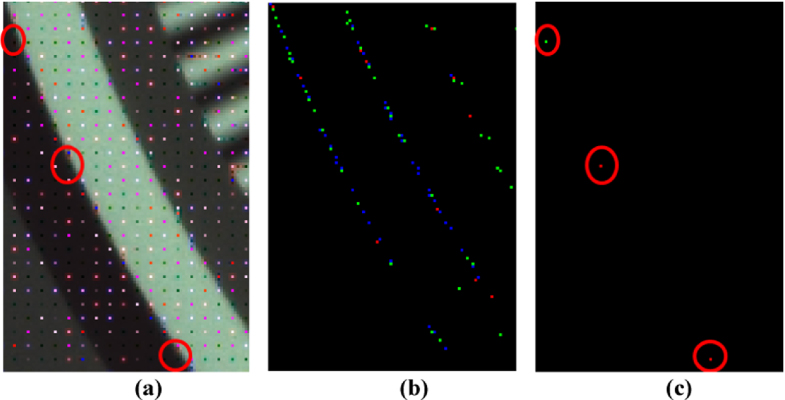

Because these dynamic pixels are constantly in flux, Samsung believes the best way to combat them is with a system capable of recognizing bad pixels as they appear and proactively removing them. The researchers tried two different neural network architectures to see if the concept would work and found that both performed much better in detection accuracy than the reference method, although one produced more false positives while registering fewer misses than the other. In the reference image below, “NN” is short for Neural Network.

“The examples of misses circled in red show that the neural networks still have problems identifying some significantly bad cases, which indicates a scope for improvement, upon which current work is going on,” Kalyanasundaram writes. “Since the false positives occur around edge regions, the use of a good quality correction method can ensure that the misdetection of these pixels as bad pixels will not have any deteriorating effects after correction, especially around edge regions.”
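For a sense of what per-frame detection involves, here is a minimal Python sketch of a classical, non-neural detector. It is neither the paper’s network nor its reference method, neither of which the article describes in detail. It flags any pixel that differs from the median of its same-color Bayer neighbors by more than a fixed threshold. The function name `detect_bad_pixels` and the threshold value are assumptions chosen for illustration.

```python
from statistics import median


def detect_bad_pixels(raw, threshold):
    """Flag pixels that deviate strongly from their same-color neighbors.

    raw: 2D list of pixel intensities from a Bayer mosaic.
    threshold: assumed absolute intensity difference that marks a pixel as bad.
    Returns a list of (row, col) coordinates in row-major order.
    """
    height, width = len(raw), len(raw[0])
    flagged = []
    for r in range(height):
        for c in range(width):
            # Same-color neighbors sit 2 pixels away in a Bayer pattern
            neighbors = [
                raw[r + dr][c + dc]
                for dr in (-2, 0, 2)
                for dc in (-2, 0, 2)
                if (dr, dc) != (0, 0)
                and 0 <= r + dr < height
                and 0 <= c + dc < width
            ]
            if neighbors and abs(raw[r][c] - median(neighbors)) > threshold:
                flagged.append((r, c))
    return flagged


# Toy 6x6 frame: flat gray with one hot pixel that appears in this frame only
frame = [[100] * 6 for _ in range(6)]
frame[3][2] = 900
flagged = detect_bad_pixels(frame, threshold=200)
print(flagged)
```

A fixed rule like this can misfire on fine detail such as a one-pixel-wide bright line, where a perfectly good pixel legitimately differs from all of its same-color neighbors. That is the kind of edge-region false positive the quote above refers to.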

While the details are highly technical, the concept is simple: use a neural network to predict, find, and eliminate bad pixels in image capture to allow for better overall image quality despite the high resolution that is being crammed onto the small sensors found on smartphones. It remains to be seen if this method can be rolled out to consumer devices, but on paper, the research sounds compelling.

Image credits: Header image uses assets licensed via Deposit Photos.