Samsung’s Self-Healing Sensors Are Exactly What We Need

Stuck and hot pixels could soon be a thing of the past with self-healing sensors.

Oh, Samsung. What could have been if you had stayed in the traditional camera market. Still, the South Korean giant has done all right for itself since its last mirrorless camera. It is making huge waves in the smartphone space with its camera tech, and we've reported on several new sensors and other pieces of technology it's working on in the past. Now, we have word of a new AI-based self-healing sensor, which could solve some issues that currently plague all sensors, if it works as intended. Head on past the break to find out more.

A report on Image Sensors World describes a new type of sensor that Samsung is developing. In fact, it's more a sensor technology than a sensor itself. As you can imagine, artificial intelligence is once again the star of the show. Using neural networks, Samsung has created sensor tech that can detect and fix bad pixels automatically.

Self-Healing Sensors – How They Work

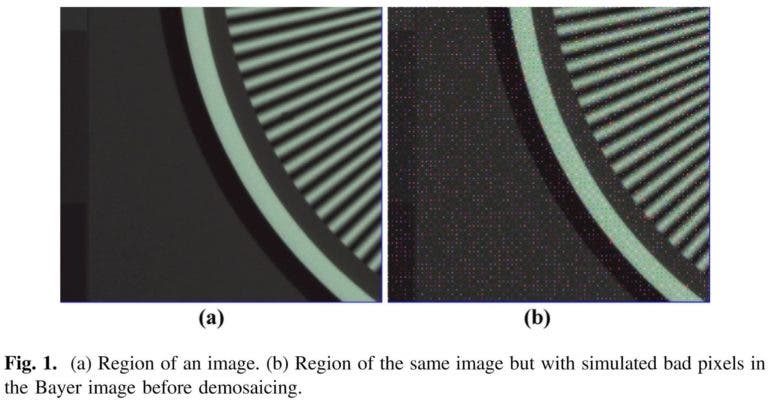

We've all seen bad pixels before, either in our images or on a display. Pixels can become stuck and show up as white or black dots. You can also get hot pixels, which appear as bright, often red, specks. Hot pixels still plague camera sensors today. They tend to show up in long exposures and are particularly bothersome for astrophotographers.

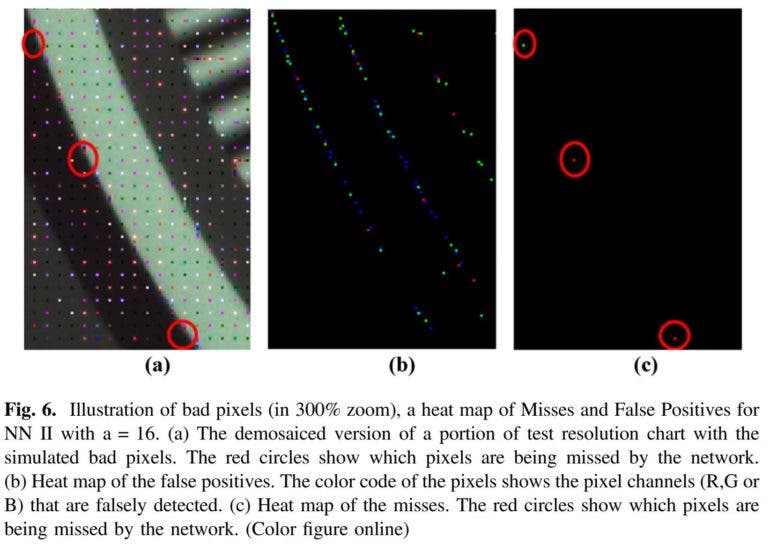

The new self-healing sensors analyze the images the camera captures, detect bad pixels, and fix them. The AI first maps the whole sensor to look for anomalies. After demosaicing, it goes back over the image to catch any bad pixels it may have missed. Once the bad or stuck pixels are identified, they are corrected. So far, studies have shown that the AI misses only 0.045% of bad pixels. That's seriously impressive.
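The report doesn't publish how Samsung's neural network works, so as a rough illustration here is a classical, non-AI stand-in for the same detect-and-fix idea: flag any pixel that differs sharply from the median of its neighbors, then replace it with the median of its healthy neighbors. The function names and the `threshold` value are hypothetical, and the "image" is just a single-channel grid of numbers.

```python
from statistics import median


def neighbors(image, y, x, skip=frozenset()):
    """Values of the up-to-8 pixels around (y, x), ignoring positions in skip."""
    h, w = len(image), len(image[0])
    return [
        image[ny][nx]
        for ny in range(max(0, y - 1), min(h, y + 2))
        for nx in range(max(0, x - 1), min(w, x + 2))
        if (ny, nx) != (y, x) and (ny, nx) not in skip
    ]


def find_bad_pixels(image, threshold=50):
    """Hypothetical detector: flag pixels far from their neighborhood median."""
    bad = []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            around = neighbors(image, y, x)
            if around and abs(value - median(around)) > threshold:
                bad.append((y, x))
    return bad


def repair(image, bad):
    """Replace each flagged pixel with the median of its unflagged neighbors."""
    flagged = set(bad)
    fixed = [row[:] for row in image]  # leave the input untouched
    for y, x in bad:
        good = neighbors(image, y, x, skip=flagged)
        if good:
            fixed[y][x] = round(median(good))
    return fixed


# Example: a flat gray frame with one hot (255) and one dead (0) pixel.
frame = [[100] * 5 for _ in range(5)]
frame[2][2] = 255
frame[4][0] = 0
print(find_bad_pixels(frame))  # [(2, 2), (4, 0)]
```

This is a single pass over a single-channel image. On raw Bayer data you would compare each pixel against same-color neighbors two pixels away, and the second check after demosaicing that the report describes isn't modeled here.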

Artificial Intelligence Is the Way Forward

Some of you will already be familiar with features in traditional cameras that deal with bad pixels. Both Panasonic and Olympus (now OM Digital Solutions) include one in their menus: Pixel Refresh on Panasonic and Pixel Mapping on Olympus. It essentially does the same job as the self-healing sensor, only it isn't automatic. You still have to spot the bad pixels yourself, start the pixel mapping sequence, and hope that it solves the problem.
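Olympus and Panasonic don't document how their mapping works internally. A common general approach, assumed here, is to capture a dark frame with no light reaching the sensor, record every pixel that still reads bright, and patch those locations in later shots. The names `build_defect_map` and `apply_defect_map` and the `threshold` value are hypothetical, and plain nested lists stand in for sensor readouts.

```python
def build_defect_map(dark_frame, threshold=20):
    """Record pixels that read brighter than threshold with no light hitting them."""
    return {
        (y, x)
        for y, row in enumerate(dark_frame)
        for x, value in enumerate(row)
        if value > threshold
    }


def apply_defect_map(image, defect_map):
    """Replace each mapped pixel with the mean of its unmapped 4-connected neighbors."""
    h, w = len(image), len(image[0])
    fixed = [row[:] for row in image]  # leave the input untouched
    for y, x in defect_map:
        good = [
            image[ny][nx]
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
            if 0 <= ny < h and 0 <= nx < w and (ny, nx) not in defect_map
        ]
        if good:
            fixed[y][x] = round(sum(good) / len(good))
    return fixed


# Example: the dark frame reveals one hot pixel, which is then patched in a real shot.
dark = [[2, 3, 1], [2, 90, 2], [1, 2, 3]]
shot = [[50, 60, 50], [70, 255, 82], [50, 40, 50]]
print(apply_defect_map(shot, build_defect_map(dark)))  # [[50, 60, 50], [70, 63, 82], [50, 40, 50]]
```

Because the map is built once and reused, a pixel that goes bad after mapping stays uncorrected until you run the routine again, which is exactly the gap an automatic approach like Samsung's aims to close.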

Having traditional cameras take care of pixel mapping for us automatically would be fantastic. If you've ever shot in low light or, as mentioned above, done astrophotography, you know how annoying bad pixels can be. If AI can give us one less thing to worry about, that would be wonderful. People poke fun at smartphone cameras and their technology, but mobile photography tech is now outdoing some of what's found in traditional cameras. The sooner traditional camera makers wake up and look at what's going on in the mobile space, the better. Hopefully, one day, more AI technology will work its way into our traditional cameras. We'll be better off for it.