New York Times Launches Prototype System to Combat Misinformation

The New York Times R&D team has partnered with the Content Authenticity Initiative (CAI) to create a prototype that gives readers transparency about, and authentication of, news visuals, part of a continued effort to reduce the spread of false information.

In 2020, PetaPixel reported that Adobe had released details on the CAI, which was founded in late 2019 in collaboration with The New York Times Company and Twitter with the aim of creating an industry standard for digital content attribution to prevent image theft and manipulation.

An Adobe study explored how hard it is for the public to identify false visual information. Drawing on qualitative and quantitative research, it found that public trust in the images people come across is comparatively low, especially for images of political events and news. Although it is increasingly common knowledge that not everything published online is truthful, fake media can still seep into people's lives unnoticed.

When everyday news consumers struggle to distinguish what is real from what isn't, the damage can take several forms. Family and friends may believe and share fake news they see on social media. An individual or organization may use it to further an agenda or to profit from advertising, especially if the piece goes viral.

CAI explains, “regardless of source, images are plucked out of the traditional and social media streams, quickly screen-grabbed, sometimes altered, posted and reposted extensively online, usually without payment or acknowledgment and often lacking the original contextual information that might help us identify the source, frame our interpretation, and add to our understanding.”

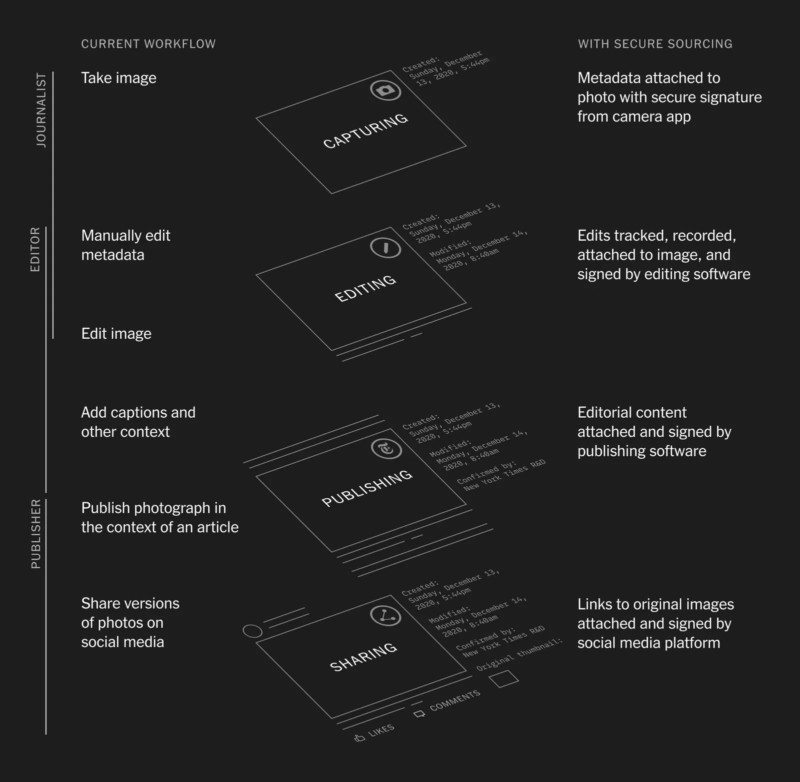

To move one step closer to a more transparent "visual ecosystem," CAI unveiled a prototype that enables end-to-end secure capture, editing, and publishing, starting with photographs and later expanding to video and other file types. Giving people a better understanding of the origin of the visual content they consume will, according to CAI, "protect against manipulated, deceptive, or out-of-context online media."

Called "secure sourcing," the prototype uses a "Qualcomm/Truepic test device with secure capture, editing via Photoshop, and publishing via their Prismic content management system." This means news professionals can digitally "sign" the work they produce, and the system "codifies information such as the location and time the photo was taken, toning and cropping edits, and the organization responsible for publication."

The aim is to eventually display a "CAI logo next to images published in traditional or social media that gives the consumer more information about the provenance of the imagery, such as where and when it was first created and how it might have been altered or edited." Readers can then click the icon, which signifies a confirmed photograph, to learn more about the information behind the image.

Building on technology from a previous case study, the team used early access to the CAI software development kit (SDK) and was able to demonstrate "provenance immutability" sealed to the images.

If successful, this work could help move toward wider integration of the signing process into the journalistic workflow from start to finish, especially with the help of capture partners like Qualcomm and Truepic, editing partners such as Adobe Photoshop in this case, and publishers like The New York Times and others.

A more unified approach to verifying visual information still faces plenty of obstacles, though. The New York Times R&D team explains that widespread adoption of the practice is necessary, as are an improved and intuitive user experience, education, and accessible tools for creators, including affordable cost.

You can read more about the prototype and the partners the team plans to work with to advance its efforts on the New York Times R&D website, where you can also browse the information behind a "confirmed photograph" used for the test.

Image credits: Header photo licensed via Depositphotos.