This Brain-Controlled Robotic Arm Can Twist, Grasp—and Feel

The brain is bidirectional: It takes information in while also sending signals out to the rest of the body, telling it to act. Even a motion that seems as straightforward as grabbing a cup calls on your brain to both command your hand muscles and listen to the nerves in your fingers.

Because Copeland’s brain hadn’t been injured in his accident, it could still—in theory—manage this dialog of inputs and outputs. But most of the electrical messages from the nerves in his body weren’t reaching the brain. When the Pittsburgh team recruited him to their study, they wanted to engineer a workaround. They believed that a paralyzed person’s brain could both stimulate a robotic arm and be stimulated by electrical signals from it, ultimately interpreting that stimulation as the feeling of being touched on their own hand. The challenge was making it all feel natural. The robotic wrist should twist when Copeland intended it to twist; the hand should close when he intended to grab; and when the robotic pinkie touched a hard object, Copeland should feel it in his own pinkie.

Of the four micro-electrode arrays implanted in Copeland’s brain, two grids read movement intentions from his motor cortex to command the robotic arm, and two grids stimulate his sensory system. From the start, the research team knew that they could use the BCI to create tactile sensation for Copeland simply by delivering electrical current to those electrodes—no actual touching or robotics required.

To build the system, researchers took advantage of the fact that Copeland retains some sensation in his right thumb, index, and middle fingers. The researchers rubbed a Q-tip there while he sat in a magnetic brain scanner, and they found which specific contours of the brain correspond to those fingers. The researchers then decoded his intentions to move by recording brain activity from individual electrodes while he imagined specific movements. And when they switched on the current to specific electrodes in his sensory system, he felt it. To him, the sensation seems like it’s coming from the base of his fingers, near the top of his right palm. It can feel like natural pressure or warmth, or weird tingling—but he’s never experienced any pain. “I’ve actually just stared at my hand while that was going on like, ‘Man, that really feels like someone could be poking right there,’” Copeland says.

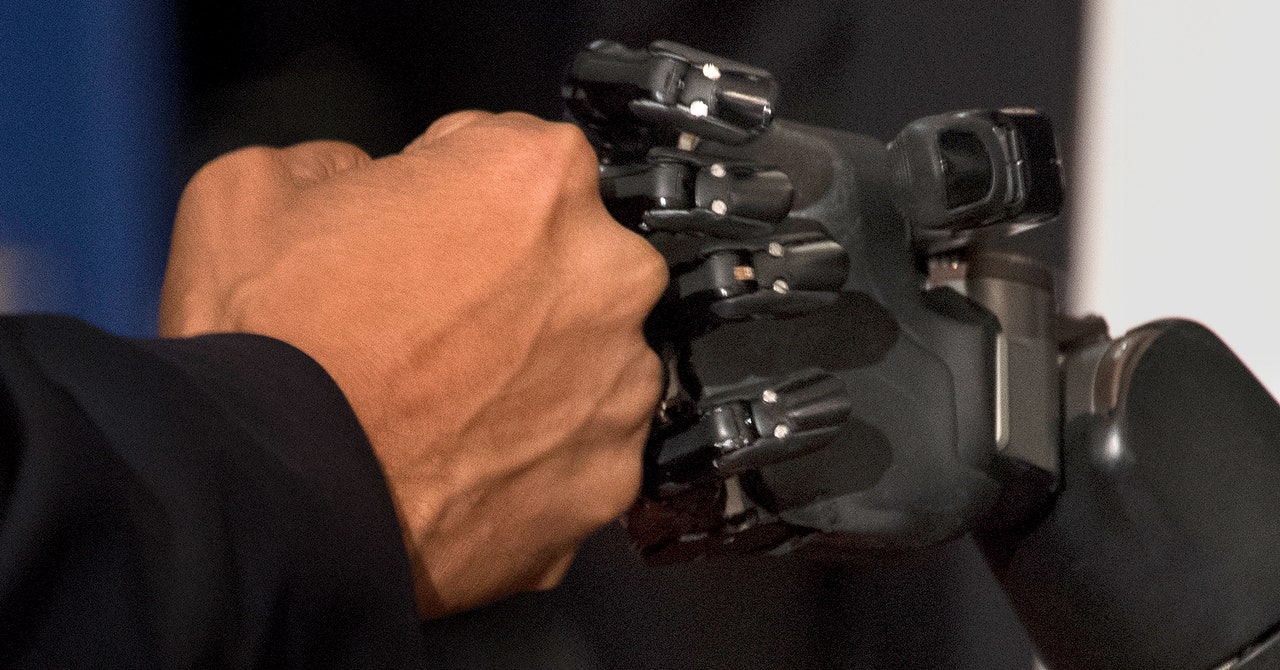

Once the researchers had established that Copeland could experience these sensations, and knew which brain areas to stimulate to create feeling in different parts of his hands, the next step was just to get Copeland used to controlling the robot arm. He and the research team set up a training room at the lab, hanging up posters of Pac-Man and cat memes. Three days a week, a researcher would hook the electrode connector from his scalp to a suite of cables and computers, and then they would time him as he grasped blocks and spheres, moving them from left to right. Over a couple of years, he got pretty damn good. He even demonstrated the system for then-president Barack Obama.

But then, says Collinger, “He kind of plateaued at his high level of performance.” A nonparalyzed person would need about five seconds to complete an object-moving task. Copeland could sometimes do it in six seconds, but his median time was around 20 seconds.

To get him over the hump, it was time to try giving him real-time touch feedback from the robot arm.

Human fingers sense pressure, and the resulting electrical signals zip along thread-like axons from the hand to the brain. The team mirrored that sequence by putting sensors on the robotic fingertips. But objects don’t always touch the fingertips, so a more reliable signal had to come from elsewhere: torque sensors at the base of the mechanical digits.