Apple Shares How its Photo-Scanning System Is Protected Against Abuse

Apple has acknowledged that the way it announced its plans to automatically scan iPhone photo libraries for child abuse content may have introduced “confusion,” and it has explained how the system is designed to prevent abuse by authoritarian governments.

After heated feedback from the community at large, Apple has acknowledged that its initial announcement introduced “confusion” and has released an updated paper on its plan to scan photos for child sexual abuse material (CSAM) on users’ iPhones, iPads, and other Apple devices.

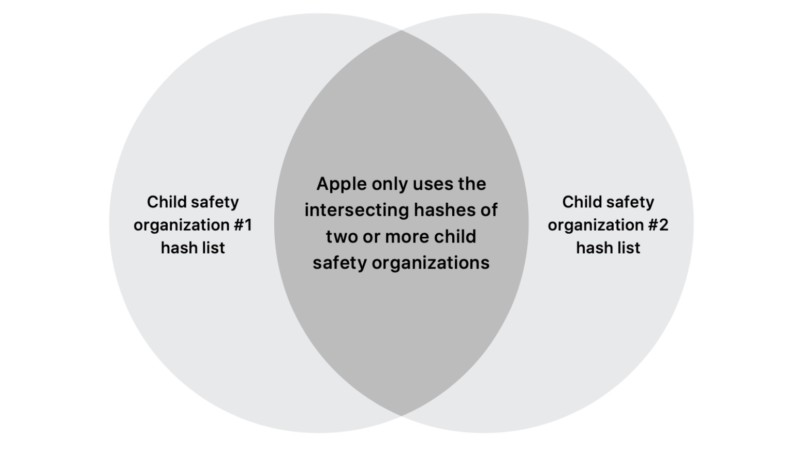

According to the updated document, Apple hopes to put privacy and security concerns about the rollout to rest by stating it will not rely on a single government-affiliated database to identify CSAM. Instead, it will only match images whose hashes appear in the databases of at least two groups with different national affiliations.

This plan is meant to prevent a single government from secretly inserting unrelated content for censorship purposes, since the hashes it added would not match any others in the combined databases. Apple executive Craig Federighi outlined some of the key details of the plan this morning in an interview with The Wall Street Journal.
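
The overlap rule described above can be illustrated with a minimal sketch. This is not Apple's implementation: the function name `eligible_hashes`, the `(jurisdiction, hashes)` input shape, and the placeholder hash strings are all assumptions made for illustration. It only shows why a hash supplied by a single jurisdiction would never make it into the matching set.

```python
from collections import defaultdict


def eligible_hashes(databases, min_jurisdictions=2):
    """Return hashes vouched for by at least `min_jurisdictions` distinct jurisdictions.

    `databases` is a hypothetical list of (jurisdiction, set_of_hashes) pairs,
    one pair per child safety organization.
    """
    jurisdictions_by_hash = defaultdict(set)
    for jurisdiction, hashes in databases:
        for h in hashes:
            jurisdictions_by_hash[h].add(jurisdiction)
    # A hash counts only if organizations in different jurisdictions agree on it.
    return {
        h
        for h, seen in jurisdictions_by_hash.items()
        if len(seen) >= min_jurisdictions
    }


# Placeholder data: "x" is supplied only by organizations in country A.
databases = [
    ("A", {"h1", "h2", "x"}),
    ("B", {"h1", "h2"}),
    ("A", {"x"}),
]
print(sorted(eligible_hashes(databases)))  # ['h1', 'h2']
```

In this toy data, `x` appears in two databases but both belong to the same jurisdiction, so it is dropped, which mirrors the protection the document describes.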

While the concept, in theory, is a good one that should be able to protect children from predators, privacy and cryptography experts have shared some sharp criticism of Apple’s plan.

The company had stated earlier that the system would use multiple child safety databases, but until this morning it had not explained how those databases would work together and overlap. According to Federighi, Apple is still finalizing agreements with other child protection groups; so far, only the U.S.-based National Center for Missing and Exploited Children (NCMEC) has been officially named as part of the program.

The document further explains that once something has been flagged by the database, the second “protection” is a human review in which all positive matches of the program’s hashes need to be visually confirmed by Apple as containing CSAM before Apple will disable the account and file a report with the child safety organization.

The paper includes additional details on how Apple will only flag an iCloud account if it has identified 30 or more images as CSAM, a threshold set to provide a “drastic safety margin” (Page 10) against false positives. As the system goes into place and starts to get used in the real world, Federighi has said this threshold may be changed and adapted. Apple will also provide a full list of hashes that auditors can check against child safety databases as an additional step towards full transparency, to ensure it is not “secretly” matching (read: censoring) more images.
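
As a rough illustration of how the threshold and the human review step combine, here is a minimal sketch. The function name `account_action` and its return labels are hypothetical, the value 30 is the figure reported above (which Federighi said may change), and the sketch uses plain counting only; it does not model any of the cryptography in Apple's design.

```python
MATCH_THRESHOLD = 30  # figure reported in the paper; may be adjusted later


def account_action(match_count, reviewer_confirms, threshold=MATCH_THRESHOLD):
    """Decide what happens to an account in this simplified model.

    match_count: number of images whose hashes matched the database.
    reviewer_confirms: whether human review confirmed the matches as CSAM.
    """
    if match_count < threshold:
        # Below the threshold the account is never flagged for review.
        return "no_action"
    if not reviewer_confirms:
        # Flagged, but human review did not confirm the matches.
        return "dismissed_after_review"
    return "disable_and_report"


print(account_action(29, True))   # no_action
print(account_action(30, False))  # dismissed_after_review
print(account_action(30, True))   # disable_and_report
```

The point of the two-stage check is that neither a handful of matches nor an unreviewed flag is enough on its own to trigger a report.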

Apple additionally noted that it does not intend to change its plans to roll out the photo scanning system this fall.

For those interested, the full paper from Apple is available to read here.

Image credits: Header photo licensed via Depositphotos.